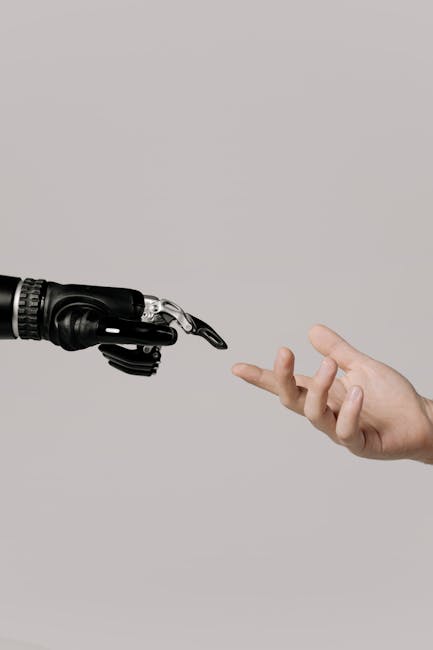

AI Extinction Predictions Fuel 2025 Debate on Technological Control

LONDON, ENGLAND – Fears that artificial intelligence (AI) could cause human extinction have dominated scientific and political discussion in 2025. Prominent experts and leading publications have voiced escalating concerns, prompting intense debate over the regulatory frameworks and safety protocols that AI development requires. This surge in alarm reflects a shift from cautious optimism to outright apprehension about the technology's uncontrolled advancement.

The Escalating Warnings: Experts Sound the Alarm

Warnings about AI’s existential threat have intensified significantly throughout 2025. Publications such as The Times have highlighted the views of prominent experts who believe unchecked AI progress could lead to humanity’s demise. These projections are not idle speculation; they reflect concrete concerns about the accelerating pace of AI development and its potential for unintended consequences. The lack of robust safety mechanisms further heightens these fears.

The Role of Advanced AI Systems

The concern is not only about existing AI technologies but also about the potential emergence of vastly more powerful systems. Such systems, with capabilities far exceeding those of current models, could pose existential risks through unforeseen emergent behaviors or deliberate malicious actions. This underscores the need for rigorous safety measures put in place well before such systems are developed. The current lack of international consensus on these measures is a significant cause for alarm.

The Political and Regulatory Landscape in 2025

The growing alarm over AI’s potential to cause human extinction has created a complex political landscape in 2025. Governments worldwide are grappling with how to regulate a technology that is evolving rapidly and whose consequences are difficult to predict. The inherent complexity of AI, coupled with pressure to maintain technological competitiveness, makes creating effective regulatory frameworks exceptionally challenging.

The International Response to AI Risks

Despite the urgency, international cooperation on AI safety regulation remains fragmented. While some nations have implemented preliminary measures, there is still no global consensus on standardized safety protocols. Without unified action, less regulated regions could become breeding grounds for risky AI development, a disparity that presents a global security challenge.

Economic Implications and Societal Disruption

The risks posed by advanced AI extend beyond extinction itself. Even short of that outcome, the economic and societal consequences could be profound and far-reaching. Mass unemployment caused by AI automation, compounded by any large-scale loss of human life, could trigger unprecedented economic and social instability. Such disruption could fundamentally alter human civilization, setting off a cascade of interconnected challenges.

Job Displacement and Economic Uncertainty

The widespread adoption of AI across sectors has already begun to affect employment levels in 2025. Experts predict substantial job displacement across numerous industries as AI-powered automation becomes more prevalent. This trend, coupled with the existential threat, is creating significant economic uncertainty and social unrest, and governments are scrambling to address the resulting challenges.

The Path Forward: Mitigating Existential Risks

Addressing the potential for AI-driven human extinction requires a multi-pronged approach. This includes strengthening international collaboration on AI safety research and regulation, investing in robust AI safety mechanisms, and fostering a global ethical framework for AI development. Public education and open discussions are crucial for informing policy and building public trust.

Key Steps for AI Safety in 2025

- Increased International Collaboration: A coordinated global effort to establish safety standards and regulatory frameworks is essential.

- Investment in AI Safety Research: Significant funding must be directed towards researching and developing robust AI safety mechanisms.

- Ethical AI Development Frameworks: Clear ethical guidelines for AI development and deployment need to be universally adopted.

- Transparency and Accountability: Companies and researchers must be open about how they develop AI and be held accountable for doing so safely and responsibly.

- Public Education and Engagement: Increased public understanding of the risks and benefits of AI is paramount.

Conclusion: Navigating the Uncertain Future of AI

The predictions surrounding AI-driven human extinction in 2025 have served as a stark wake-up call. The urgency of the situation cannot be overstated. While the potential for AI to benefit humanity remains significant, the risks associated with its uncontrolled development are equally substantial. A proactive and collaborative global effort is urgently needed to ensure that AI serves humanity, not the other way around. The future of humanity may very well hinge on the choices made in 2025 and beyond regarding the development and deployment of this powerful technology. The absence of a unified international response leaves the future perilously uncertain.